Behind the scenes: 10 million facebook fans

Table of Contents

It’s been forever since the last update so i figured i’d write something. Here’s a behind the scenes look at the recently launched 10 million fans celebration for adidas Originals, developed by Sid Lee Amsterdam.

The Brief #

adidas Originals will hit 10 million fans on their Facebook page soon. Do something to thank these people for being part of the community. Or something in that direction, i’m paraphrasing.

The Idea #

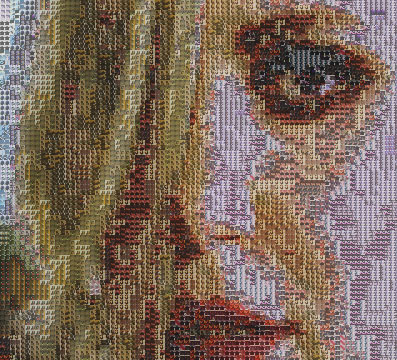

After numerous rounds of concepting with the team we come up with the idea of celebrating the fans instead the celebrities. After all; they’re the ones who made this happen. The execution of this was to create a short video that includes the fans in an interactive video mosaic style piece. The video gets overlaid with the fans’ profile pictures to build the picture. This came from an earlier internal prototype that we were able to apply to a client project.

Making it work #

We wanted to have good reach with this and specifically target the people on the adidas Originals page on Facebook. Because of this there wasn’t going to be a destination but instead push the video directly in the stream using the OpenGraph meta tags and build a custom video player. People on Facebook would see the video in the same way they see an embedded YouTube video, but they could also interact with it. In addition it wouldn’t be static but instead in real time pull in people who are fans.

Getting user ids #

Thanks to the OpenGraph API it’s super easy to get information about people.

All you need is the user id. Turns out there’s an open bug report in the

Facebook bug tracker that prevents us from getting the people who are fans of a

page. This worked before but there hasn’t been a response from Facebook since

early December.. So, we couldn’t rely on this to get ids. We could screenscrape

the insights page but this is against Facebook’s Terms of Service. Of course we

could hardcode a list of ids but we didn’t want to do that. Instead the

approach was to get a list of recent posts

(https://graph.facebook.com/adidasoriginals/posts?limit=1000) and then query

those posts (https://graph.facebook.com/{ postId }/likes&limit=1000) for

people who like them. With this we were able to get a whole list of people who

are the most active fans and interact with the content. We could get thousands

and thousands of ids this way and it wasn’t against Facebook’s terms of

service. In addition we get some names that add some extra spice. Perfect!

A shitload of images #

With the user ids getting the profile pictures is as simple as loading

http://graph.facebook.com/{ user id }/picture. Facebook does the rest. So we

load the profile pictures of the users. When the images have been loaded

they’re scaled down to a 3x3px images, and the middle pixel is measured to get

the average color of the image. This average color is then converted from HEX

to HSV and all the data is stored in a value object in a Vector together with

the original image, preprocessed (scaled down) images and some other details

for quick & easy access. In addition the images that were going to be used on

the grid were desaturated slightly and the contrast was turned down a bit to

make them stand out a bit less.

Playing the video #

The video is scaled down to a size where each pixel would respond to a profile picture. So with 5px images we scale down the video to 20%. The idea then would be to loop through each pixel of each frame of the video and replace that pixel with the closest match. These images are drawn to another BitmapData that is displayed. Seems easy enough…

Mapping color to picture #

Let’s say we measure the the pixel at 0, 0 of the video and get a value of #006282. Which one is the closest match to this? As it’s highly unlikely an image would have the exact same average color we would need a lookup table that doesn’t require exact matches but instead finds the closest match. Also this had to be fast—super fast—to allow realtime playback. Looping through a vector of images to get their average color, calculating the distance to the input color and then sorting by distance seems like an obvious solution but is far too slow to process 6000+ images per frame. Instead we had to create a lookup map with some fuzzy logic to even out the images that don’t match perfectly…

Solution 1: #

A Vector as a lookup table doesn’t really work as it requires exact matches so using a BitmapData seemed like a better solution. If we put consider the color a two dimensional object (x: hue, y: value) we can plot the input pictures out on an image. The previously mentioned #006282 has a HSV value of (Hue: 195°, Saturation: 100%, Value: 51%) so it would end up at x:195 y:51. The problem here though is that we end up with a lot of empty spots as not every single color is covered. We need support for these too, somehow…

A Voronoi diagram is commonly used for things like finding the closest radio tower to a cellphone. Kinda similar to what we’re doing here, right? We calculate a voronoi diagram based on the x, y coordinates mentioned above and each zone gets colored with an index to the picture. The result is rendered to a BitmapData. Then when we need to find the closest match to a picture we calculate the hue and value of it, and use that as x & y on a getPixel() on the lookup BitmapData. The result is a color that is the index to the image. Seems easy enough? 😃 Applying Lloyd’s algorithm a couple times to space out the data a bit helped the result even more. This allowed a match to be a bit off by either hue or value and the closest match would still be returned. This would support any number of images too, the closest one is always returned. Pefect!

The problem here though became performance. Even with the fastest (AS3 only) Voronoi algorithms calculating the diagram for the input images (1000+) was too slow and caused a hiccup after the load. In addition the algorithm didn’t scale that well as adding a new image exponentially increased the processing time. This is a bad user experience and we started looking for another solution (even if it’s a couple seconds on unresponsiveness). Sooo, killing the darling that had caused a ‘heureka!’ moment earlier… 🙁

Solution 2: #

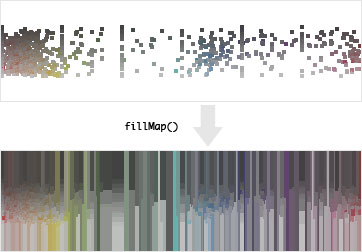

While a Voronoi diagram might be a fairly accurate way of finding the closest image speed was more important than accuracy here. The idea of using a BitmapData as a lookup table with hue and value mapped to X and Y still seemed like a good idea. Instead of calculating a voronoi diagram though we directly plot the colors (at 3x3px) to a BitmapData and after completion expand each zone to fill out the gaps. This is a ghetto solution but works much faster than a voronoi diagram and does the job. Judging by the visual result of both you couldn’t tell which approach was used.

Below you see an image before and after where the color was plotted to imageColorMap and then expanded. Seems pretty smooth. The imageLookupMap was used for the index.

Nothing special going on here but code for the curious:

private function fillMap( ) : void {

var col : int = 0;

var row : int = 0;

var cols : int = 360; // degrees of hue

var rows : int = 100; // values (percentage)

var offset : int;

var indexes : Vector;

var colors : Vector;

var outputColors : Vector;

var iRow : int;

var iCol : int;

var iColor : int;

var oColor : int;

var overTop : Boolean;

var overBottom : Boolean;

var matchedTop : Boolean;

var matchedBottom : Boolean;

var overLeft : Boolean;

var overRight : Boolean;

var matchedLeft : Boolean;

var matchedRight : Boolean;

var i : int;

var n : int;

var color : int;

for ( col = 0; col < cols; col++ ) {

indexes = new Vector( );

colors = new Vector( );

outputColors = new Vector( );

for ( row = 0; row < rows; row++ ) {

color = imageLookupMap.getPixel( col, row );

if ( color != 0xFFFFFF && row > 0 && row < rows - 1 ) {

indexes.push( row );

colors.push( color );

outputColors.push( imageColorMap.getPixel( col, row ) );

}

}

// Fill gaps vertically

offset = 1;

while ( indexes.length > 0 ) {

n = indexes.length;

for ( i = 0; i < n; i++ ) {

iRow = indexes[ i ];

iColor = colors[ i ];

oColor = outputColors[ i ];

overTop = iRow - offset < 0;

overBottom = iRow + offset > rows – 1;

matchedTop = false;

matchedBottom = false;

if ( !overTop && imageLookupMap.getPixel( col, iRow – offset ) == 0xFFFFFF ) {

imageLookupMap.setPixel( col, iRow – offset, iColor );

imageColorMap.setPixel( col, iRow – offset, oColor );

matchedTop = true;

}

if ( !overBottom && imageLookupMap.getPixel( col, iRow + offset ) == 0xFFFFFF ) {

imageLookupMap.setPixel( col, iRow + offset, iColor );

imageColorMap.setPixel( col, iRow + offset, oColor );

matchedBottom = true;

}

if ( ( overTop && overBottom ) || ( !matchedTop && !matchedBottom ) ) {

indexes.splice( i, 1 );

colors.splice( i, 1 );

outputColors.splice( i, 1 );

i–;

n–;

}

}

offset++;

}

}

// Fill gaps horizontally

for ( row = 0; row < rows; row++ ) {

indexes = new Vector( );

colors = new Vector( );

outputColors = new Vector( );

for ( col = 0; col < cols; col++ ) {

color = imageLookupMap.getPixel( col, row );

if ( color != 0xFFFFFF && col > 0 && col < cols - 1 ) {

indexes.push( col );

colors.push( color );

outputColors.push( imageColorMap.getPixel( col, row ) );

}

}

// Fill gaps vertically

offset = 1;

while ( indexes.length > 0 ) {

n = indexes.length;

for ( i = 0; i < n; i++ ) {

iCol = indexes[ i ];

iColor = colors[ i ];

oColor = outputColors[ i ];

overLeft = iCol - offset < 0;

overRight = iCol + offset > cols – 1;

matchedLeft = false;

matchedRight = false;

if ( !overLeft && imageLookupMap.getPixel( iCol – offset, row ) == 0xFFFFFF ) {

imageLookupMap.setPixel( iCol – offset, row, iColor );

imageColorMap.setPixel( iCol – offset, row, oColor );

matchedLeft = true;

}

if ( !overRight && imageLookupMap.getPixel( iCol + offset, row ) == 0xFFFFFF ) {

imageLookupMap.setPixel( iCol + offset, row, iColor );

imageColorMap.setPixel( iCol + offset, row, oColor );

matchedRight = true;

}

if ( ( overLeft && overRight ) || ( !matchedLeft && !matchedRight ) ) {

indexes.splice( i, 1 );

colors.splice( i, 1 );

outputColors.splice( i, 1 );

i–;

n–;

}

}

offset++;

}

}

}

Now we have a 360x100px BitmapData (imageLookupMap) that looks kinda weird but doing a getPixel on it returns a color value that is then used as an index to read out an image from a Vector. Boom. Super quick and scalable!

Putting it all together #

After this it’s quite simple. We loop through each pixel of the video, query the lookup table for an index and then copy the pixels from that image to an output BitmapData. For this project we also connected the mosaic to the spectrum of the music in the video. After this is completed the original video that was stretched down is stretched up again and drawn on top with BlendMode.OVERLAY at a low alpha to cheat a bit and give a better visual effect. After some optimization of the analysis functions it runs at around 40-50fps with 6000 images. Everybody’s happy 🙂

Hope this sparks somebody’s interest to do something similar 🙂